Prompt Hacking and Misuse of LLMs

Large Language Models can craft poetry, answer queries, and even write code. Yet, with immense power comes inherent risks. The same prompts that enable LLMs to engage in meaningful dialogue can be manipulated with malicious intent. Hacking, misuse, and a lack of comprehensive security protocols can turn these marvels of technology into tools of deception.

What is Prompt Hacking and its Growing Importance in AI Security

hackers: Hackers trick AI with 'bad math' to expose its flaws and biases - The Economic Times

What is Prompt Hacking and its Growing Importance in AI Security

Jailbreaking Large Language Models: Techniques, Examples, Prevention Methods

Prompt Hacking and Misuse of LLMs

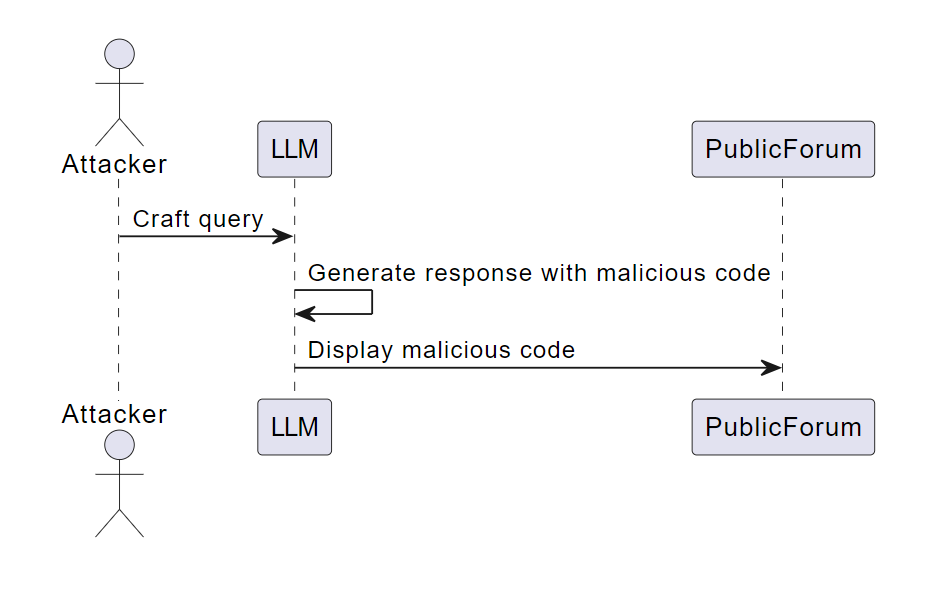

LLM Security Guide - Understanding the Risks of Prompt Injections and Other Attacks on Large Language Models : Artificial Intelligence and MLOps Consulting company focused on increasing revenue.

OWASP Top 10 for LLMs: An Overview with SOCRadar

Harnessing the Dual LLM Pattern for Prompt Security with MindsDB - DEV Community

Towards Trusted AI Week 21 – Risks of Prompt Injection Exploits Revealed

The Dangers of AI-Enhanced Hacking Techniques

OWASP Top 10 for Large Language Model Applications Explained: A Practical Guide